We're thrilled to announce that the ability to access AWS S3 data on Azure Databricks through Unity Catalog is now generally available, enabling cross-cloud data governance. As the only unified and open governance solution for all data and AI, Unity Catalog empowers organizations to govern data wherever it lives, ensuring security, compliance and interoperability across clouds. With this release, teams can directly configure and query AWS S3 data from Azure Databricks without migrating or copying datasets. This makes it easier to standardize policies, access controls and auditing across ADLS and S3 storage.

We will cover two key topics in this blog:

- How Unity Catalog enables cross-cloud data governance

- How to access and work with AWS S3 data from Azure Databricks

What is cross-cloud data governance in Unity Catalog?

As businesses adopt hybrid and cross-cloud architectures, they often face fragmented access controls, inconsistent security policies and duplicated governance processes. This complexity heightens risk, drives up operational costs and slows innovation.

Cross-cloud data governance with Unity Catalog simplifies this by extending a single permission model, centralized policy enforcement and comprehensive auditing across data stored in multiple clouds, such as AWS S3 and Azure Data Lake Storage, all managed from within the Databricks platform.
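
To make the "single permission model" idea concrete, here is a toy, in-memory sketch. It is not the Unity Catalog API or its internals: `ToyCatalog`, `ExternalLocation`, `demo_catalog`, the bucket, storage account and principal names are all hypothetical. It models one list of external locations spanning both clouds and one permission check (the real `READ FILES` privilege name) that resolves a path to its location and checks grants the same way whether the path is `abfss://` (ADLS) or `s3://` (S3).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class ExternalLocation:
    name: str
    url: str         # e.g. "abfss://..." for ADLS or "s3://..." for AWS S3
    credential: str  # name of the storage credential backing this location


@dataclass
class ToyCatalog:
    """Toy stand-in for a metastore's external locations and grants (hypothetical)."""
    locations: List[ExternalLocation] = field(default_factory=list)
    # (principal, location name) -> privileges granted on that location
    grants: Dict[Tuple[str, str], Set[str]] = field(default_factory=dict)

    def add_location(self, loc: ExternalLocation) -> None:
        self.locations.append(loc)

    def grant(self, privilege: str, location: str, principal: str) -> None:
        self.grants.setdefault((principal, location), set()).add(privilege)

    def resolve(self, path: str) -> Optional[ExternalLocation]:
        """Return the location whose URL is the longest path prefix of `path`."""
        matches = [
            loc for loc in self.locations
            if path == loc.url or path.startswith(loc.url.rstrip("/") + "/")
        ]
        return max(matches, key=lambda loc: len(loc.url), default=None)

    def can_read(self, principal: str, path: str) -> bool:
        """Same check for every cloud: find the location, then look up the grant."""
        loc = self.resolve(path)
        if loc is None:
            return False
        return "READ FILES" in self.grants.get((principal, loc.name), set())


def cloud_of(url: str) -> str:
    """Label a storage URL by cloud, based only on its scheme."""
    if url.startswith("s3://"):
        return "AWS S3"
    if url.startswith("abfss://"):
        return "ADLS"
    return "unknown"


def demo_catalog() -> ToyCatalog:
    catalog = ToyCatalog()
    catalog.add_location(ExternalLocation(
        "adls_sales", "abfss://sales@myaccount.dfs.core.windows.net/raw", "azure_cred"))
    catalog.add_location(ExternalLocation(
        "s3_sales", "s3://my-sales-bucket/raw", "aws_iam_cred"))
    # One grant statement shape, applied identically to both clouds.
    for loc in catalog.locations:
        catalog.grant("READ FILES", loc.name, "analysts")
    return catalog


if __name__ == "__main__":
    catalog = demo_catalog()
    for path in ("s3://my-sales-bucket/raw/2024/orders.parquet",
                 "abfss://sales@myaccount.dfs.core.windows.net/raw/a.csv"):
        print(cloud_of(path), catalog.can_read("analysts", path))
```

The point of the design is that the caller never branches on the cloud: governance lives in one place, and only the storage credential behind each location differs.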

Key benefits of cross-cloud data governance in Unity Catalog include:

- Unified governance – Manage access policies, security controls and compliance standards from one place, without juggling siloed systems

- Frictionless data access – Securely discover, query and analyze data across clouds in a single workspace, eliminating silos and reducing complexity

- Stronger security and compliance – Centralized visibility, tagging, lineage, data classification and auditing across all cloud storage

By unifying governance across clouds, Unity Catalog gives teams a single, secure interface to manage and maximize the value of all their data and AI assets, wherever they live.

How does it work?

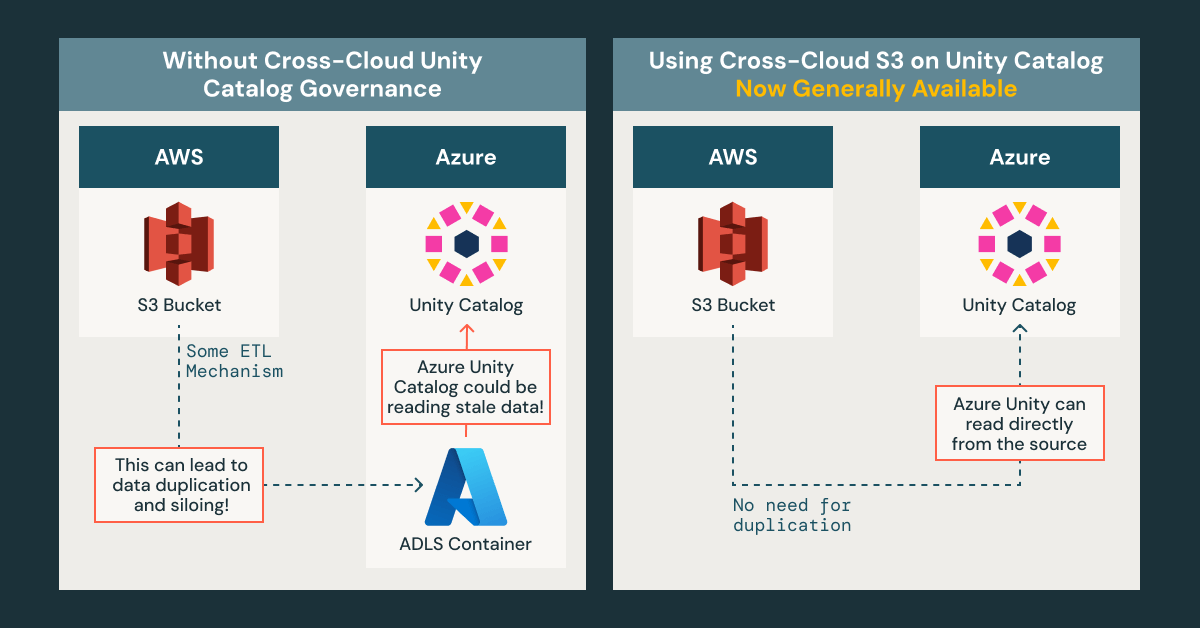

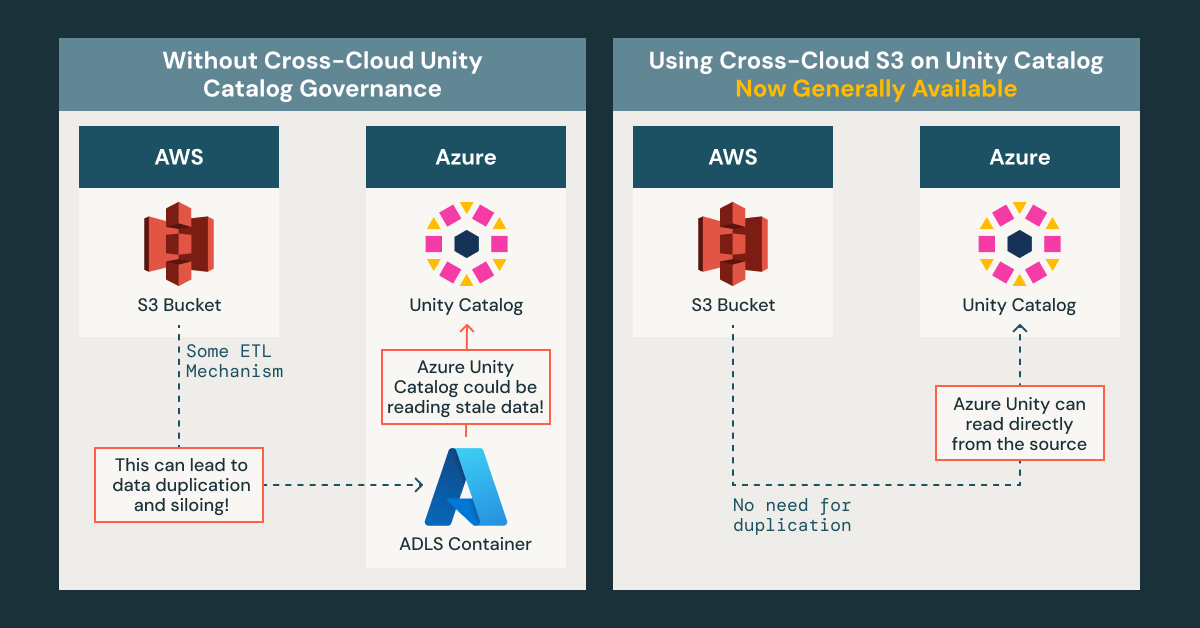

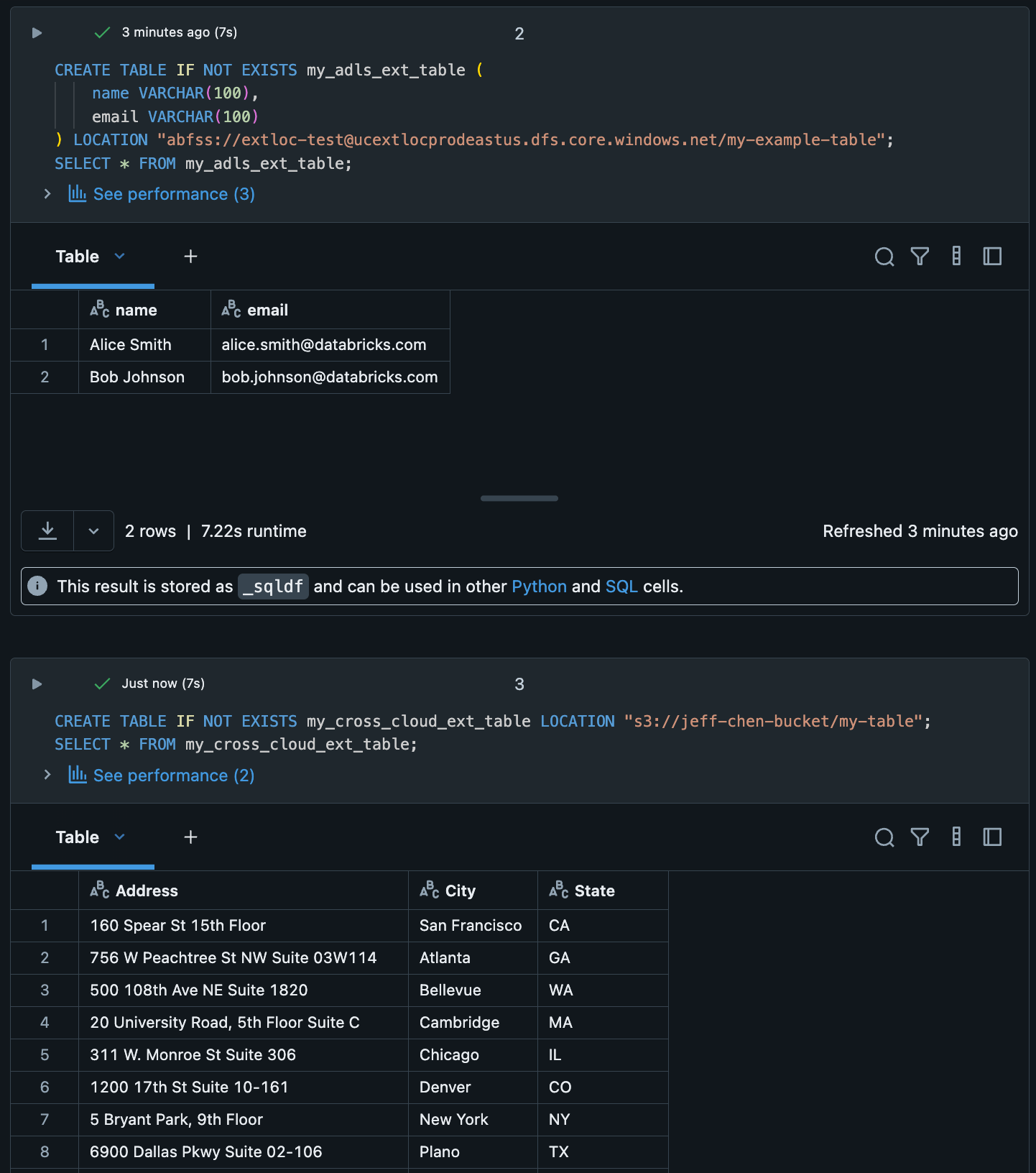

Previously, on Azure Databricks, Unity Catalog only supported storage locations in ADLS. This meant that if you had data stored in an AWS S3 bucket but needed to access and process it with Unity Catalog on Azure Databricks, the traditional approach required extracting, transforming and loading (ETL) that data into an ADLS container, which is time-consuming. It also increases the risk of maintaining duplicate, outdated copies of data.

With this GA release, you can now set up cross-cloud S3 external locations directly from Unity Catalog on Azure Databricks. This lets you seamlessly read and govern your S3 data without migration or duplication.

In a few easy steps, you can configure access to your AWS S3 bucket:

1. Set up a storage credential and create an external location. Once your AWS IAM role and S3 resources are configured, you can create your storage credential and external location directly in Azure Databricks Catalog Explorer.

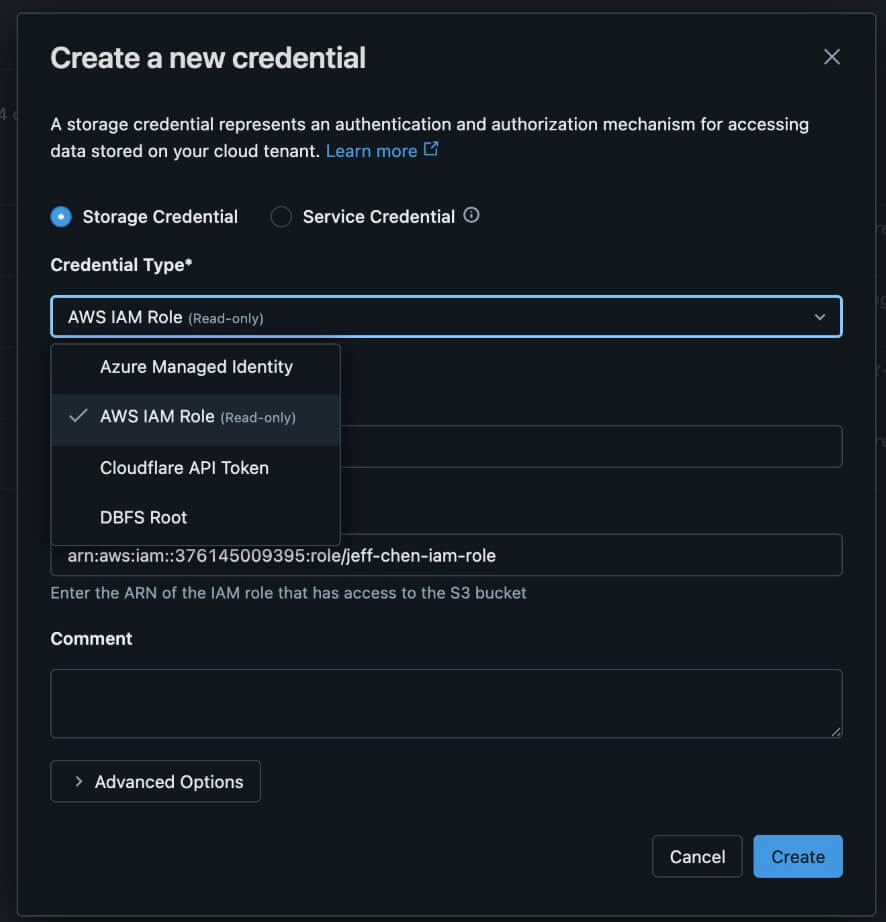

   - To create your storage credential, go to Credentials within Catalog Explorer. Choose AWS IAM Role (Read-only), fill in the required fields and add the trust policy snippet.

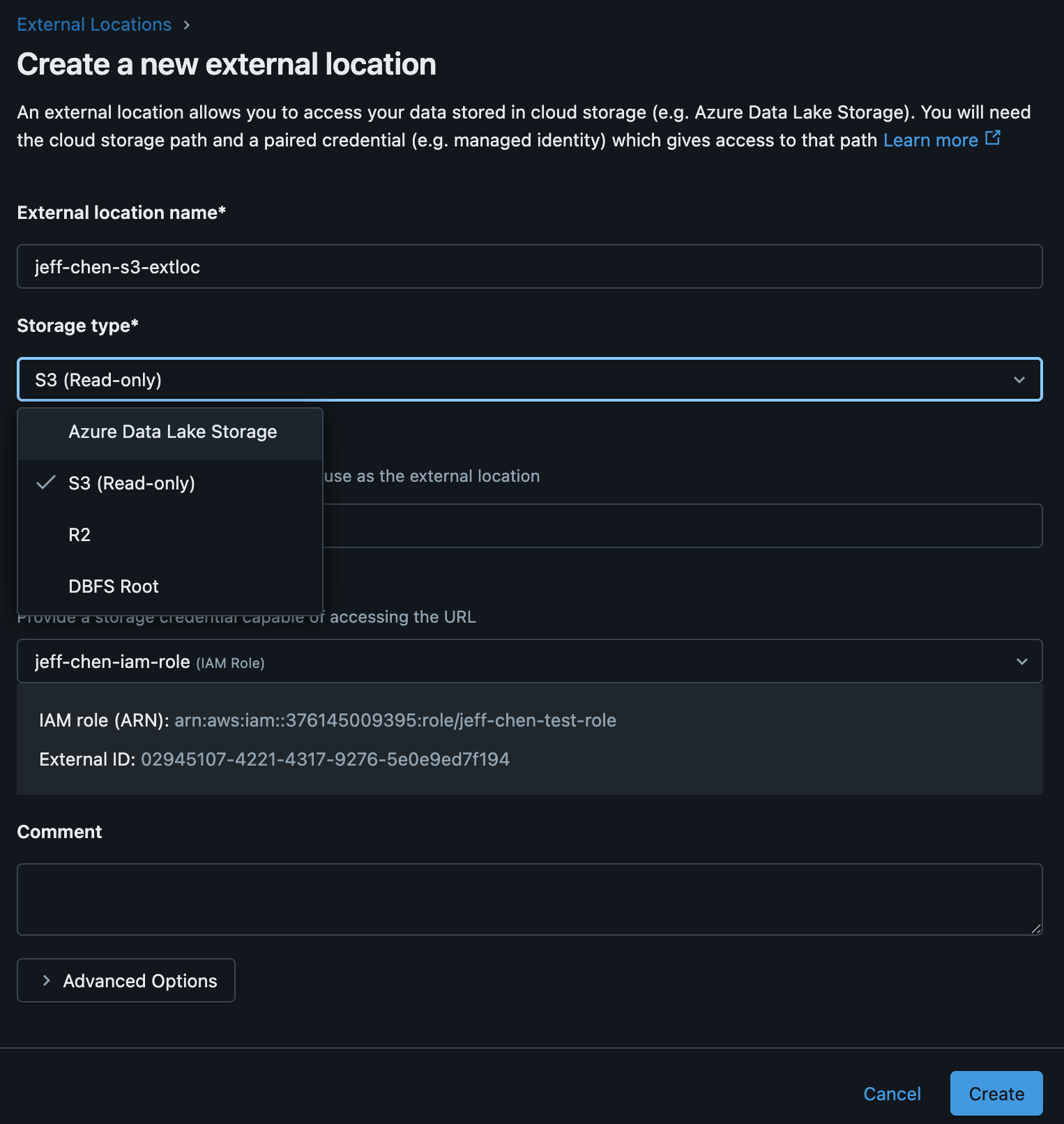

   - To create an external location, go to External Locations within Catalog Explorer. Then select the credential you just set up and fill in the remaining details.

2. Apply permissions. On the Credentials page in Catalog Explorer, you can now see your ADLS and S3 credentials in one place in Azure Databricks. From there, you can manage access across both storage systems.

3. Start querying! You're ready to query your S3 data directly from your Azure Databricks workspace.
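
The steps above can also be scripted. The sketch below is a minimal illustration under stated assumptions, not the documented procedure: the storage credential itself is still created in Catalog Explorer as described, and the credential name `aws_readonly_cred`, location `s3_sales`, bucket `my-sales-bucket` and group `analysts` are hypothetical. It builds a generic AWS `sts:AssumeRole` trust policy guarded by an `sts:ExternalId` condition (the principal ARN and external ID are placeholders; the snippet Catalog Explorer shows you is authoritative and may contain more), plus the Databricks SQL `CREATE EXTERNAL LOCATION` and `GRANT READ FILES` statements for steps 1 and 2.

```python
import json


def s3_trust_policy(principal_arn: str, external_id: str) -> dict:
    """Generic IAM trust policy: `principal_arn` may assume the role only
    when it presents the matching sts:ExternalId."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": [principal_arn]},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


def quote_ident(name: str) -> str:
    """Backtick-quote a SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def create_external_location_sql(location: str, url: str, credential: str) -> str:
    """Step 1: register an S3 prefix as a Unity Catalog external location."""
    if not url.startswith("s3://"):
        raise ValueError("expected an s3:// URL")
    if "'" in url:
        raise ValueError("URL must not contain single quotes")
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS {quote_ident(location)} "
        f"URL '{url}' "
        f"WITH (STORAGE CREDENTIAL {quote_ident(credential)})"
    )


def grant_read_files_sql(location: str, principal: str) -> str:
    """Step 2: let a user or group read files under the external location."""
    return (
        f"GRANT READ FILES ON EXTERNAL LOCATION {quote_ident(location)} "
        f"TO {quote_ident(principal)}"
    )


if __name__ == "__main__":
    # Placeholders: copy the real values from the Catalog Explorer credential page.
    policy = s3_trust_policy("<principal-arn-from-catalog-explorer>",
                             "<external-id-from-catalog-explorer>")
    print(json.dumps(policy, indent=2))
    for stmt in (
        create_external_location_sql("s3_sales", "s3://my-sales-bucket/raw", "aws_readonly_cred"),
        grant_read_files_sql("s3_sales", "analysts"),
    ):
        print(stmt)  # in a Databricks notebook you could run: spark.sql(stmt)
```

Generating the statements as strings keeps the sketch runnable anywhere; in a notebook, passing them to `spark.sql` applies them against your metastore.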

What is supported in the GA release?

With GA, we now support accessing external tables and volumes in S3 from Azure Databricks. Specifically, the following capabilities are now supported for reads:

- AWS IAM role storage credentials

- S3 external locations

- S3 external tables

- S3 external volumes

- S3 dbutils.fs access

- Delta Sharing of S3 data from Unity Catalog on Azure
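
As a rough illustration of the table, volume and `dbutils.fs` items above, the sketch below builds Databricks SQL for registering an existing Delta table and an external volume on S3, plus the `/Volumes/<catalog>/<schema>/<volume>/...` path used to address volume files. All names (`main.sales.orders`, `main.sales.landing`, `my-sales-bucket`) are hypothetical, both S3 paths are assumed to sit under an external location you already have, and you are assumed to hold the relevant CREATE privileges on it.

```python
def _check_s3_url(url: str) -> str:
    if not url.startswith("s3://") or "'" in url:
        raise ValueError(f"expected an s3:// URL without quotes, got {url!r}")
    return url


def three_part(name: str) -> str:
    """Quote a catalog.schema.object name as `catalog`.`schema`.`object`."""
    parts = name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.name, got {name!r}")
    return ".".join("`" + p.replace("`", "``") + "`" for p in parts)


def create_external_table_sql(table: str, s3_url: str) -> str:
    # Registers Delta data that already exists at s3_url; the schema comes from the Delta log.
    return (f"CREATE TABLE IF NOT EXISTS {three_part(table)} "
            f"USING DELTA LOCATION '{_check_s3_url(s3_url)}'")


def create_external_volume_sql(volume: str, s3_url: str) -> str:
    # Exposes arbitrary (non-tabular) files under s3_url as a governed volume.
    return (f"CREATE EXTERNAL VOLUME IF NOT EXISTS {three_part(volume)} "
            f"LOCATION '{_check_s3_url(s3_url)}'")


def volume_path(catalog: str, schema: str, volume: str, *parts: str) -> str:
    """Build a /Volumes/<catalog>/<schema>/<volume>/... file path."""
    return "/".join(["/Volumes", catalog, schema, volume, *parts])


if __name__ == "__main__":
    print(create_external_table_sql("main.sales.orders", "s3://my-sales-bucket/raw/orders"))
    print(create_external_volume_sql("main.sales.landing", "s3://my-sales-bucket/raw/landing"))
    print(volume_path("main", "sales", "landing", "2024", "orders.csv"))
    # In a Databricks notebook you might then read with, for example:
    #   spark.table("main.sales.orders").limit(10).show()
    #   dbutils.fs.ls("s3://my-sales-bucket/raw/landing")
```

The table and volume point at non-overlapping prefixes (`raw/orders` and `raw/landing`), which keeps each object's storage separate under the shared external location.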

Getting started

To try cross-cloud data governance on Azure Databricks, check out the documentation on how to set up storage credentials for IAM roles for S3 storage on Azure Databricks. Note that your cloud provider may charge fees for accessing data from outside their cloud services. To get started with Unity Catalog, see our Unity Catalog guide for Azure.

Join the Unity Catalog product and engineering team at Data + AI Summit, June 9–12 at the Moscone Center in San Francisco! Get a first look at the latest innovations in data and AI governance. Register now to secure your spot!