Introducing Sora and the Video playground in Azure AI Foundry

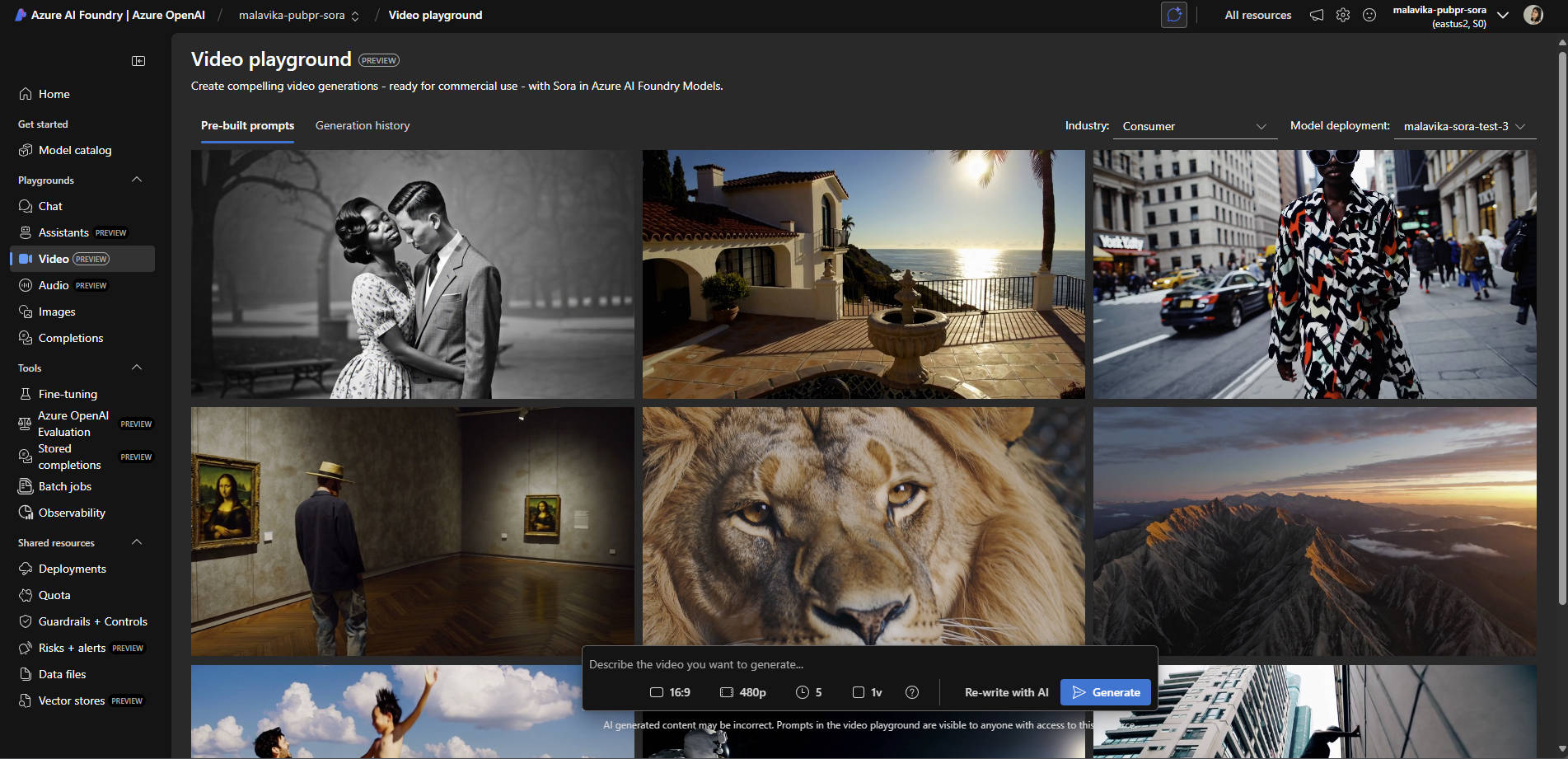

The Video playground in Azure AI Foundry is your high-fidelity testbed for prototyping with top video generation models that are ready for commercial use, such as Sora from Azure OpenAI. Read our Tech Community blog on GPT-image-1 and Sora.

Modern development spans several systems (APIs, services, SDKs, and data models), often before you are ready to fully commit to a framework, write tests, or spin up infrastructure. As software ecosystems grow more complex, the need for safe, lightweight ways to validate ideas becomes critical. The Video playground was built to meet this need.

Purpose-built for developers, the Video playground offers a controlled environment to experiment with prompt structures, evaluate model consistency and prompt adherence, and optimize outputs for industry use cases. Whether you are building video products or tools, or transforming your business workflows, the Video playground supports your planning and experimentation so you can iterate faster and move your workflows forward with confidence.

Rapidly prototype, from prompt to code

The Video playground offers a low-friction environment designed for rapid prototyping, API exploration, and technical validation with video generation models. Think of the Video playground as your high-fidelity environment for building better, faster, and smarter, without configuring localhost, untangling dependency clashes, or worrying about compatibility between your build and the model.

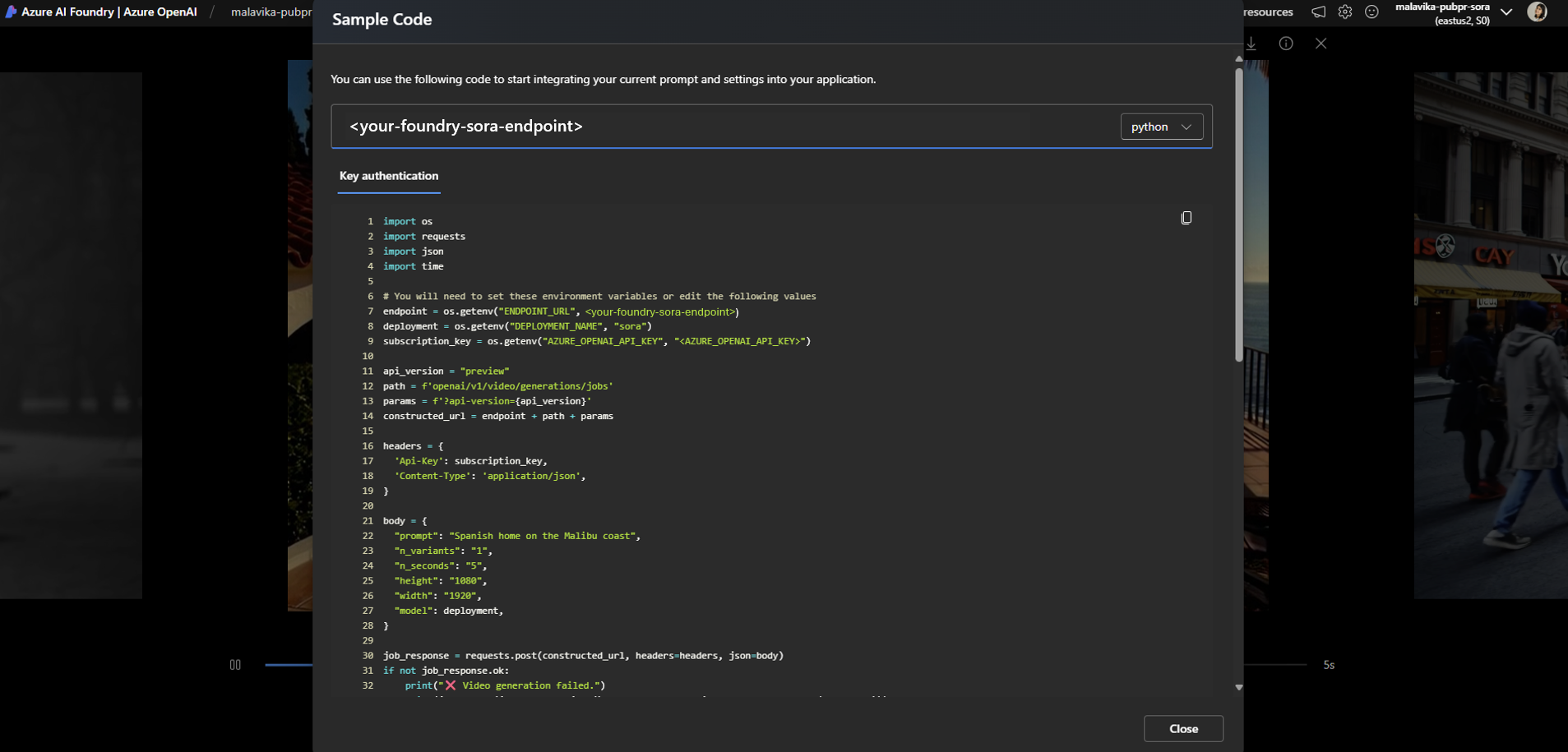

Sora from Azure OpenAI is the first model released in the Video playground. It comes with its own API, a unique offering available in Azure AI Foundry. Once your initial experimentation in the Video playground is done, you can use the API in VS Code to continue scaled development for your use case in your own environment.

- Iterate quickly: Experiment with text prompts and adjust generation controls such as aspect ratio, resolution, and duration.

- Prompt optimization: Tune, tweak, and rewrite prompts with AI assistance, visually compare results across the variations you test, start from pre-built industry prompts, and create your own prompt variations in the playground, grounded in the model's behavior.

- API-ready interface: Everything in the Video playground mirrors the model's API, so what works in the playground translates directly into code, with predictability and repeatability.
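To mirror the playground's iterate-and-compare loop in code, here is a minimal sketch that expands a few prompt variants and generation controls into request bodies, one per combination. The field names (`model`, `prompt`, `width`, `height`, `n_seconds`) follow the Azure OpenAI Sora preview REST body as shown in Microsoft's quickstart at the time of writing. Treat them as assumptions to verify against the code the playground generates. `MAX_SECONDS`, `build_body`, and `sweep` are hypothetical names for illustration.

```python
from itertools import product

# Assumption: preview duration limit at the time of writing; verify in the docs.
MAX_SECONDS = 20


def build_body(prompt: str, width: int, height: int, seconds: int, model: str = "sora") -> dict:
    """Build one job-request body (field names assumed from the preview REST API)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if not 1 <= seconds <= MAX_SECONDS:
        raise ValueError(f"seconds must be between 1 and {MAX_SECONDS}")
    return {"model": model, "prompt": prompt, "width": width, "height": height, "n_seconds": seconds}


def sweep(prompts, resolutions, durations) -> list:
    """Expand every prompt x resolution x duration combination into a request body."""
    return [
        build_body(p, w, h, s)
        for p, (w, h), s in product(prompts, resolutions, durations)
    ]


if __name__ == "__main__":
    bodies = sweep(
        prompts=[
            "A red kite over a beach at sunset",
            "A red kite over a beach at sunset, slow dolly-in",
        ],
        resolutions=[(854, 480), (1280, 720)],
        durations=[5, 10],
    )
    print(len(bodies))  # 2 prompts x 2 resolutions x 2 durations = 8
```

Keeping the sweep in data, not hand-edited requests, makes it easy to line up generated clips against the exact settings that produced them. This is the same comparison the playground's grid view gives you visually.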

Features

We built the Video playground for developers who want to experiment with video generation. It is a fully featured, controlled environment for high-fidelity experimentation with model-specific APIs, and a great demo interface for your Chief Product Officer and VP of Engineering.

- Model-specific generation controls: Adjust key controls (e.g., aspect ratio, duration, resolution) to deeply understand a specific model's responsiveness and constraints.

- Pre-built prompts: Get inspired by how to use video generation models such as Sora for your use case. The playground includes a set of 9 curated videos from Microsoft, each shown on a card with its pre-built prompt.

- Port to production with multi-language code samples: For Sora from Azure OpenAI, the playground mirrors the Sora API, a unique offering available to Azure AI Foundry users. "View Code" provides multi-language code samples (Python, JavaScript, Go, cURL) for your video output, prompt, and generation controls that mirror the API structure. What you create in the Video playground can easily be ported to VS Code, so you can continue scaled development with the API.

- Side-by-side comparison in grid view: Visually compare outputs across prompt refinements or parameter changes.

- Azure AI Content Safety integration: All model endpoints are integrated with Azure AI Content Safety, which filters out harmful and unsafe videos.
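Because "View Code" output mirrors the REST API, here is a minimal standard-library sketch of the first call, creating a video generation job. It assumes the preview route `/openai/v1/video/generations/jobs`, the `api-key` header, and `api-version=preview` as shown in Microsoft's Sora quickstart at the time of writing. The endpoint and the helpers `make_create_request` and `submit` are hypothetical. For real work, prefer the code the playground generates for your deployment.

```python
import json
import urllib.request

# Assumption: preview API version at the time of writing; check the current docs.
API_VERSION = "preview"


def make_create_request(endpoint: str, api_key: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a POST that creates a video generation job."""
    url = f"{endpoint.rstrip('/')}/openai/v1/video/generations/jobs?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def submit(req: urllib.request.Request) -> str:
    """Send the request and return the job id from the JSON response (assumed `id` field)."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["id"]
```

Separating request construction from sending keeps the payload inspectable and testable offline. Only `submit` touches the network.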

Check out a demo of these features and Sora in the Video playground in our breakout session at Microsoft Build 2025 here.

There is no need to search for, build, or configure your own localhost UI to generate video, hope it will work with the next state-of-the-art model, or spend time fixing cascading build errors caused by the package or code changes that new models require. The Video playground in Azure AI Foundry gives you versioned access to the latest models, with API updates surfaced in the UI.

What to test in the Video playground

As you use the Video playground to plan your workload, consider the following when visually comparing your generations:

- Prompt-to-motion translation
  - Does the video model interpret my prompt in a way that makes logical and temporal sense?
  - Is the motion consistent with the described action or scene? How could I use AI rewriting to improve the prompt?

- Frame consistency
  - Do characters, objects, and styles stay consistent across frames?
  - Are there visual artifacts, jitter, or unnatural transitions?

- Scene control
  - How well can I control scene composition, object behavior, or camera angles?
  - Can I guide scene transitions or backgrounds?

- Length and timing
  - How do different prompts affect video length and pacing?
  - Does the video feel too fast, too slow, or too short?

- Multimodal input integration
  - What happens when I provide a reference image, pose data, or audio input?
  - Can I generate video synchronized to a given voiceover?

- Post-processing needs
  - What level of raw fidelity can I expect before I need editing tools?
  - Do I need to upscale, stabilize, or retouch the video before using it in production?

- Latency and performance
  - How long does it take to generate video for different prompt types or resolutions?
  - What are the cost and performance trade-offs of generating 5-second vs. 15-second clips?
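To answer the latency questions with measurements rather than impressions, one approach is to time each job from submission until it reaches a terminal state. Below is a minimal sketch. It assumes the preview API reports a `status` field that ends in `succeeded`, `failed`, or `cancelled`. `fetch_status` is a hypothetical callable you would wire to a GET on the job's status URL. The clock and sleep functions are injectable so the loop can be exercised without network calls.

```python
import time
from typing import Callable, Tuple

# Assumption: terminal job states in the preview API at the time of writing.
TERMINAL = {"succeeded", "failed", "cancelled"}


def wait_for_job(
    fetch_status: Callable[[], dict],
    interval: float = 5.0,
    timeout: float = 600.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[dict, float]:
    """Poll until the job reaches a terminal state; return (job_json, elapsed_seconds)."""
    start = clock()
    while True:
        job = fetch_status()
        elapsed = clock() - start
        if job.get("status") in TERMINAL:
            return job, elapsed
        if elapsed >= timeout:
            raise TimeoutError(f"job still {job.get('status')!r} after {elapsed:.0f}s")
        sleep(interval)
```

Running the same prompt at 5 and 15 seconds and comparing the returned elapsed times gives a rough per-duration latency figure. The polling interval bounds how precise that figure can be.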

Get started with Sora and other models at scale on Azure AI Foundry, with the infrastructure you need. For more, see our recent Microsoft Mechanics video showing the Sora API in action:

https://www.youtube.com/watch?v=Jy7hopkfiyg

Get started now

- Sign in to Azure AI Foundry.

- Create a hub and/or project.

- Deploy the Azure OpenAI Sora model from the Foundry model catalog or directly from the Video playground.

- Prototype in the Video playground: iterate on text prompts and optimize the controls for your use case.

- Finished prototyping? Move to scaled development in VS Code with the Sora API from Azure OpenAI.
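When moving from the playground to your own code, the last step is retrieving the finished clip. Below is a minimal sketch. It assumes a succeeded job lists its outputs under `generations[*].id` and that each video is served from `/openai/v1/video/generations/{id}/content/video` with `api-version=preview`, as in Microsoft's preview quickstart at the time of writing. `video_urls` and `download` are hypothetical helpers, not part of any SDK.

```python
import urllib.request

# Assumption: preview API version at the time of writing; check the current docs.
API_VERSION = "preview"


def video_urls(endpoint: str, job: dict) -> list:
    """Return one content URL per generation in a succeeded job (route assumed)."""
    if job.get("status") != "succeeded":
        raise ValueError(f"job is {job.get('status')!r}, not 'succeeded'")
    base = endpoint.rstrip("/")
    return [
        f"{base}/openai/v1/video/generations/{g['id']}/content/video?api-version={API_VERSION}"
        for g in job.get("generations", [])
    ]


def download(url: str, api_key: str, path: str) -> None:
    """Fetch one video and write it to `path` (network call; not exercised offline)."""
    req = urllib.request.Request(url, headers={"api-key": api_key})
    with urllib.request.urlopen(req) as resp, open(path, "wb") as out:
        out.write(resp.read())
```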

Build with Azure AI Foundry

- Read our Tech Community blog on GPT-image-1 and Sora.

- Start and jump directly into

- Download

- Take

- Check

- Keep the conversation running and