The oil and gas industry relies heavily on seismic data to explore for and extract hydrocarbons safely and efficiently. However, processing and analyzing large amounts of seismic data can be a daunting task that requires significant computational resources and expertise.

Equinor, a leading energy company, used the Databricks Data Intelligence Platform to optimize one of its seismic data transformation workflows, achieving significant time and cost savings while improving data observability.

Equinor's goal was to strengthen one of its 4D seismic interpretation workflows by automating and optimizing the detection and classification of reservoir changes over time. This process supports the identification of drilling targets, reduces the risk of costly dry wells, and promotes environmentally responsible drilling practices. The key business expectations include:

- Optimal drilling targets: Improve target identification to support drilling a large number of new wells in the coming decades.

- Fast, cost-effective analysis: Reduce the time and cost of 4D seismic analysis through automation.

- Deeper reservoir insights: Integrate more subsurface data to enable better interpretations and decision-making.

Understanding seismic data

Seismic cubes: 3D models of the subsurface

Seismic data acquisition involves deploying air guns to generate sound waves that reflect off subsurface structures and are recorded by hydrophones. These sensors, mounted on streamers towed by seismic vessels or placed on the seabed, collect raw data that is then processed to create detailed 3D images of the subsurface geology.

- File format: SEG-Y

- Data representation: The processed data is stored as 3D cubes, offering a comprehensive view of subsurface structures.
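To make the SEG-Y format a little more concrete, the sketch below reads a few fields from the 400-byte binary file header that follows the 3200-byte textual header, using only Python's `struct` module. The byte offsets follow the SEG-Y rev 1 layout; the function name is hypothetical, and in a real pipeline a library such as segyio (for example `segyio.open` together with `segyio.tools.cube`) would handle headers, trace layout, and sample formats.

```python
import struct

# SEG-Y rev 1 layout: a 3200-byte textual header, then a 400-byte binary header.
# The fields read here are big-endian 16-bit integers.
TEXTUAL_HEADER_SIZE = 3200
BINARY_HEADER_SIZE = 400

# Offsets inside the binary header (file bytes 3217, 3221 and 3225, counted from 1).
SAMPLE_INTERVAL_OFFSET = 16    # sample interval in microseconds
SAMPLES_PER_TRACE_OFFSET = 20  # number of samples per data trace
FORMAT_CODE_OFFSET = 24        # sample format code, e.g. 1 = IBM float, 5 = IEEE float


def read_binary_header(raw: bytes) -> dict:
    """Return key binary-header fields from the first 3600 bytes of a SEG-Y file."""
    if len(raw) < TEXTUAL_HEADER_SIZE + BINARY_HEADER_SIZE:
        raise ValueError("too few bytes for the SEG-Y textual and binary headers")
    binary = raw[TEXTUAL_HEADER_SIZE:TEXTUAL_HEADER_SIZE + BINARY_HEADER_SIZE]
    # ">H" = big-endian unsigned 16-bit integer; these fields are never negative.
    (interval_us,) = struct.unpack_from(">H", binary, SAMPLE_INTERVAL_OFFSET)
    (n_samples,) = struct.unpack_from(">H", binary, SAMPLES_PER_TRACE_OFFSET)
    (format_code,) = struct.unpack_from(">H", binary, FORMAT_CODE_OFFSET)
    return {
        "sample_interval_us": interval_us,
        "samples_per_trace": n_samples,
        "format_code": format_code,
    }

# Usage (hypothetical file name):
#   with open("survey.sgy", "rb") as f:
#       print(read_binary_header(f.read(3600)))
```

Knowing the sample count and format code is what lets a reader compute each trace's byte length, which is also what makes it possible to split a large SEG-Y file into independent chunks for parallel processing.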

Seismic horizons: mapping geological boundaries

Seismic horizons are interpretations of seismic data: continuous surfaces that represent a particular geological feature. These horizons mark geological boundaries associated with changes in rock properties or even fluid content. By analyzing seismic wave reflections at these boundaries, geologists can identify key subsurface features.
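Why such boundaries appear as reflections can be summarized with the standard normal-incidence reflection coefficient, included here as textbook background rather than as part of Equinor's workflow. Each layer i has an acoustic impedance Z_i, the product of its density ρ_i and P-wave velocity v_i, and the reflected amplitude depends on the impedance contrast across the interface:

```latex
Z_i = \rho_i \, v_i, \qquad R = \frac{Z_2 - Z_1}{Z_2 + Z_1}
```

A change in lithology or pore fluid, for example gas replacing brine, changes ρ or v and therefore R, which is why fluid movements in a reservoir can show up when surveys are compared over time.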

- File format: CSV, commonly used for storing interpreted seismic horizon data.

- Data representation: Horizons are stored as 2D surfaces.
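A horizon exported as CSV can be loaded into a sparse 2D grid keyed by inline and crossline. The sketch below assumes hypothetical column names (`inline`, `xline`, `twt_ms` for two-way time in milliseconds); real interpretation exports vary by software, so the column names are parameters.

```python
import csv
from typing import Dict, Iterable, Tuple

Location = Tuple[int, int]  # (inline, crossline)


def load_horizon(lines: Iterable[str],
                 inline_col: str = "inline",
                 xline_col: str = "xline",
                 value_col: str = "twt_ms") -> Dict[Location, float]:
    """Read a horizon CSV into a sparse grid: (inline, crossline) -> value.

    Rows with an empty value are skipped, since interpreted horizons often
    have gaps where no pick was made.
    """
    surface: Dict[Location, float] = {}
    for row in csv.DictReader(lines):
        raw = (row.get(value_col) or "").strip()
        if not raw:
            continue
        surface[(int(row[inline_col]), int(row[xline_col]))] = float(raw)
    return surface

# Usage (hypothetical path):
#   with open("top_reservoir.csv", newline="") as f:
#       horizon = load_horizon(f)
```

A dictionary keeps the sketch dependency-free and handles gaps naturally; a dense array with a null value is the usual alternative when the surface is large and mostly complete.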

Challenges with the existing pipeline

The current seismic data pipeline processes data to generate the following key outputs:

- 4D seismic difference cubes: Capture changes in the subsurface over time by comparing two seismic cubes of the same physical area, typically acquired months or years apart.

- 4D seismic difference maps: These maps extract attributes or properties from 4D seismic cubes to highlight specific changes in the seismic data and support reservoir analysis.
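Both outputs can be illustrated with a small, dependency-free sketch. It stores each survey as a mapping from (inline, crossline) to a trace of amplitude samples, computes the difference cube as monitor minus base, and derives a map by taking the RMS of the difference in a window around an interpreted horizon. The source does not name the map attributes the pipeline produces, so RMS amplitude is an assumed example, as are the function names; production code would use NumPy arrays or distributed processing rather than Python lists.

```python
import math
from typing import Dict, List, Tuple

Location = Tuple[int, int]          # (inline, crossline)
Cube = Dict[Location, List[float]]  # location -> amplitude samples of one trace


def difference_cube(base: Cube, monitor: Cube) -> Cube:
    """4D difference cube: monitor minus base, trace by trace.

    Only locations present in both surveys are kept.
    """
    diff: Cube = {}
    for loc, base_trace in base.items():
        monitor_trace = monitor.get(loc)
        if monitor_trace is None:
            continue  # location not covered by the monitor survey
        if len(monitor_trace) != len(base_trace):
            raise ValueError(f"sample count mismatch at {loc}")
        diff[loc] = [m - b for m, b in zip(monitor_trace, base_trace)]
    return diff


def rms_map(diff: Cube, horizon: Dict[Location, float],
            sample_interval_ms: float, half_window: int) -> Dict[Location, float]:
    """RMS of the difference within +/- half_window samples of the horizon.

    Assumes every trace starts at 0 ms and the horizon is given in two-way time (ms).
    """
    result: Dict[Location, float] = {}
    for loc, t_ms in horizon.items():
        trace = diff.get(loc)
        if trace is None:
            continue
        centre = round(t_ms / sample_interval_ms)  # horizon time -> sample index
        lo = max(centre - half_window, 0)
        hi = min(centre + half_window + 1, len(trace))
        window = trace[lo:hi]
        if window:
            result[loc] = math.sqrt(sum(x * x for x in window) / len(window))
    return result
```

Because each trace and each map location is processed independently, both steps parallelize naturally by location, which is what a distributed redesign can exploit.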

However, several challenges limit the efficiency and scalability of the existing pipeline:

- Suboptimal distributed processing: Relies on multiple independent Python tasks running in parallel on single-node clusters, which is inefficient.

- Limited resilience: Prone to failure and lacks mechanisms for fault tolerance or automated recovery.

- Lack of horizontal scalability: Requires high-spec nodes with substantial memory (e.g., 112 GB), which increases costs.

- High development and maintenance overhead: Managing and troubleshooting the pipeline requires significant engineering effort.
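A back-of-the-envelope calculation illustrates why a single-node design pushes toward high-memory machines. The survey dimensions below are hypothetical and not taken from Equinor's data; the point is that memory grows with the product of inlines, crosslines, and samples, so a few cubes plus intermediate copies can fill even a large node, whereas a distributed design can spread cubes across many smaller workers.

```python
def cube_size_bytes(n_inlines: int, n_xlines: int, n_samples: int,
                    bytes_per_sample: int = 4) -> int:
    """Size of a dense seismic cube in memory (4 bytes/sample assumes float32)."""
    return n_inlines * n_xlines * n_samples * bytes_per_sample


def to_gib(n_bytes: int) -> float:
    """Convert bytes to gibibytes (2**30 bytes)."""
    return n_bytes / 2**30


# Hypothetical survey dimensions, for illustration only.
one_cube = cube_size_bytes(1000, 1000, 1500)  # 6.0e9 bytes, about 5.6 GiB
# Naively differencing on one node holds base, monitor and result at once.
working_set = 3 * one_cube                    # 1.8e10 bytes, about 16.8 GiB
```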

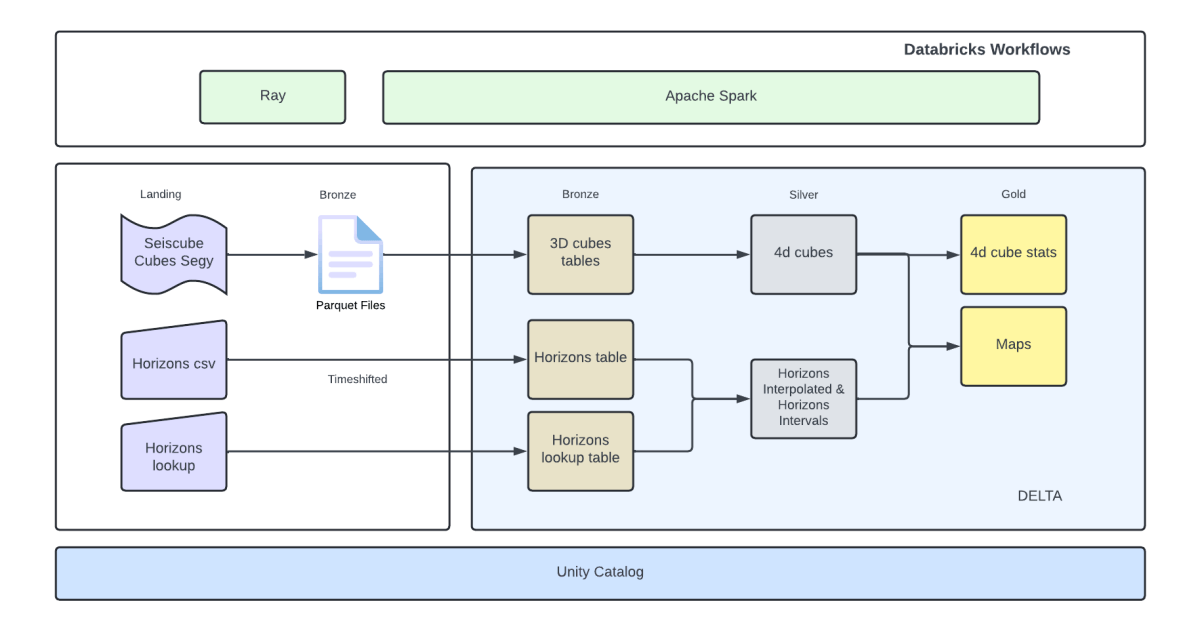

Proposed solution architecture

To address these challenges, we re-engineered the pipeline as a distributed solution using Ray and Apache Spark™, governed by Unity Catalog on the Databricks platform. This approach significantly improved scalability, resilience, and cost efficiency.
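The core pattern of the redesign, one independent task per SEG-Y file with failed tasks retried automatically, can be sketched without a cluster. The snippet below uses Python's `concurrent.futures` thread pool as a stand-in for Ray so that it runs anywhere; the function names and retry policy are assumptions, not Equinor's code. With Ray, a similar shape would use a function decorated with `@ray.remote(max_retries=..., retry_exceptions=True)`, `.remote()` calls, and `ray.get` to collect results, with CPU-heavy trace processing running in separate worker processes rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, TypeVar

R = TypeVar("R")


def run_with_retries(task: Callable[[str], R], item: str, max_retries: int) -> R:
    """Call task(item); on an exception, retry up to max_retries more times."""
    attempt = 0
    while True:
        try:
            return task(item)
        except Exception:
            attempt += 1
            if attempt > max_retries:
                raise  # give up and surface the last error


def process_files(task: Callable[[str], R], paths: Iterable[str],
                  max_retries: int = 2, workers: int = 4) -> Dict[str, R]:
    """Fan one task per file out to a worker pool and collect results by path."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {p: pool.submit(run_with_retries, task, p, max_retries) for p in paths}
        # result() re-raises the task's exception if all retries failed.
        return {p: f.result() for p, f in futures.items()}
```

Keeping each file's work independent is what makes both retries and horizontal scaling simple: a failed task can be rerun in isolation, and adding workers increases throughput without changing the processing logic.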

We used the following technologies on the Databricks platform to implement the solution:

- Apache Spark™: An open source framework for large-scale data processing and analytics, enabling efficient and scalable computation.

- Databricks Workflows: For orchestrating data engineering, data science, and analytics tasks.

- Delta Lake: An open source storage layer that ensures reliability through ACID transactions, scalable metadata handling, and unified batch and streaming data processing. It is the default storage format on Databricks.

- Ray: A high-performance distributed computing framework used to scale Python applications, enabling distributed processing of SEG-Y files with segyio and the existing processing logic.

- segyio: A Python library for reading and writing SEG-Y files that makes working with seismic data straightforward.
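Landing per-file results in Delta Lake means turning cubes into table rows. The sketch below assumes one row per trace, keyed by a survey identifier plus inline and crossline numbers; this schema, the survey ID, and the table name in the comments are hypothetical, not the layout Equinor used. The commented lines show how such rows could be written to a Unity Catalog table with the standard PySpark DataFrame writer.

```python
from typing import Dict, Iterator, List, Tuple

Cube = Dict[Tuple[int, int], List[float]]  # (inline, crossline) -> samples
Row = Tuple[str, int, int, List[float]]    # (survey_id, inline, xline, samples)


def cube_to_rows(survey_id: str, cube: Cube) -> Iterator[Row]:
    """Yield one row per trace, ordered by inline then crossline."""
    for (inline, xline), samples in sorted(cube.items()):
        yield (survey_id, inline, xline, list(samples))

# On Databricks (untested sketch; "main.seismic.traces" is a hypothetical
# three-level Unity Catalog name):
#   schema = "survey_id string, inline int, xline int, samples array<float>"
#   df = spark.createDataFrame(list(cube_to_rows("base", cube)), schema)
#   df.write.format("delta").mode("append").saveAsTable("main.seismic.traces")
```

A trace-per-row layout keeps rows a manageable size while still letting Spark filter by survey or location, and persisting cubes this way is what allows later steps to reuse them instead of re-reading SEG-Y files.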

Key benefits

The redesigned seismic data pipeline addressed the inefficiencies of the existing pipeline while introducing scalability, resilience, and cost optimization. The key benefits are:

- Significant time savings: Eliminated redundant data processing by persisting intermediate results (e.g., 3D and 4D cubes), so that only the necessary datasets need to be reprocessed.

- Cost efficiency: Reduced costs by up to 96% for specific compute steps, such as map generation.

- Fault-tolerant design: Used Apache Spark's distributed processing framework to introduce fault tolerance and automatic task retries.

- Horizontal scalability: Overcame a key limitation of the existing solution by scaling horizontally, ensuring efficient processing as data volumes grow.

- Standardized data format: Adopted an open, standardized data format that streamlines data processing, simplifies analytics, improves data sharing, and strengthens governance and data quality.
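The time savings come from persisting intermediate results and reprocessing only what changed. The source does not describe how the pipeline decides what to rework, so the sketch below assumes a simple rule: recompute a step only when a hash of its input differs from the one recorded with the stored output. The class name is hypothetical, and for large SEG-Y files a cheaper signal such as file size and modification time, or a Delta table version, may be preferable to hashing full contents.

```python
import hashlib
from typing import Callable, Dict, Tuple


def fingerprint(data: bytes) -> str:
    """Content hash used to detect whether an input has changed."""
    return hashlib.sha256(data).hexdigest()


class IncrementalStep:
    """Re-run a processing step only when its input changes.

    `self.store` is an in-memory stand-in for persisted intermediate results
    (in the described pipeline, stored 3D and 4D cubes).
    """

    def __init__(self, step: Callable[[bytes], bytes]):
        self.step = step
        self.store: Dict[str, Tuple[str, bytes]] = {}  # name -> (input hash, output)
        self.runs = 0  # number of times the step actually executed

    def get(self, name: str, data: bytes) -> bytes:
        digest = fingerprint(data)
        cached = self.store.get(name)
        if cached is not None and cached[0] == digest:
            return cached[1]  # input unchanged: reuse the persisted result
        self.runs += 1
        output = self.step(data)
        self.store[name] = (digest, output)
        return output
```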

Conclusion

This project highlights the great potential of modern data platforms such as Databricks to transform traditional seismic data processing workflows. By integrating tools such as Ray, Apache Spark, and Delta Lake on the Databricks platform, we delivered a solution with measurable benefits:

- Efficiency gains: Faster data processing and built-in fault tolerance.

- Cost reduction: A more economical approach to seismic data analysis.

- Improved maintainability: A simplified pipeline architecture and a standardized technology stack reduced complexity and development costs.

The redesigned pipeline not only optimized seismic workflows but also established a scalable, robust foundation for future improvements. It serves as a valuable model for other organizations that aim to modernize their seismic data processing and achieve similar business outcomes.

Acknowledgments

Special thanks to the Equinor Data Engineering and Data Science communities and the Equinor Research and Development team for their contributions to this initiative.

“Excellent experience working with Professional Services: very high technical competence and communication skills.”

– Anton Eskov
