Azure Databricks is a first-party Microsoft service, natively integrated with the Azure ecosystem to unify data and AI with high-performance analytics and deep tooling support. This tight integration now includes a native Databricks Job activity in Azure Data Factory (ADF), which makes it easier to trigger Databricks Workflows directly from within ADF.

This new ADF activity is an immediate best practice, and all ADF and Azure Databricks users should consider moving to this pattern.

The new Databricks Job activity is very simple to use:

1. In your ADF pipeline, drag the Databricks Job activity onto the canvas

2. On the Azure Databricks tab, select a Databricks linked service to authenticate to the Azure Databricks workspace

   - You can authenticate using one of these options:

     - A PAT token

     - The ADF system-assigned managed identity, or

     - A user-assigned managed identity

   - Although the linked service requires you to configure a cluster, this cluster is neither created nor used when running this activity. It is retained for compatibility with other activity types

3. On the Settings tab, select the Databricks Workflow to run in the Job drop-down list (you will see only the Jobs that your authenticated principal has access to). In the Job Parameters section below, configure any Job Parameters to send to the Databricks Workflow. To learn more about Databricks Job Parameters, please check the docs.

   - Note that the Job and Job Parameters can be configured with dynamic content

That’s all there is to it. ADF will start your Databricks Workflow and return the Job Run ID and URL. ADF will then poll until the Job Run completes. Read more below to find out why this new pattern is an instant classic.
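To make the start-and-wait behavior concrete, here is a minimal Python sketch of the same flow against the Databricks Jobs REST API (`/api/2.1/jobs/run-now` and `/api/2.1/jobs/runs/get`). It is not how ADF is implemented internally; it only shows the equivalent calls. The helper names (`make_http_transport`, `run_job_and_wait`), the polling interval, and the injectable transport are assumptions made for illustration.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict, Optional

# Hypothetical transport signature: (method, path, payload) -> parsed JSON.
Transport = Callable[[str, str, Optional[Dict[str, Any]]], Dict[str, Any]]

# Life cycle states after which a run will not change any further.
TERMINAL_STATES = {"TERMINATED", "SKIPPED", "INTERNAL_ERROR"}


def make_http_transport(host: str, token: str) -> Transport:
    """Build a transport that calls the workspace REST API with a bearer token."""

    def call(method: str, path: str,
             payload: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        data = json.dumps(payload).encode() if payload is not None else None
        request = urllib.request.Request(
            f"{host}{path}",
            data=data,
            method=method,
            headers={
                "Authorization": f"Bearer {token}",
                "Content-Type": "application/json",
            },
        )
        with urllib.request.urlopen(request) as response:
            return json.load(response)

    return call


def run_job_and_wait(call: Transport, job_id: int,
                     job_parameters: Dict[str, str],
                     poll_seconds: float = 30.0,
                     sleep: Callable[[float], None] = time.sleep) -> Dict[str, Any]:
    """Start a job run, then poll until it reaches a terminal state."""
    started = call("POST", "/api/2.1/jobs/run-now",
                   {"job_id": job_id, "job_parameters": job_parameters})
    run_id = started["run_id"]
    while True:
        run = call("GET", f"/api/2.1/jobs/runs/get?run_id={run_id}", None)
        state = run.get("state", {})
        if state.get("life_cycle_state") in TERMINAL_STATES:
            return {
                "run_id": run_id,
                "run_page_url": run.get("run_page_url"),
                "result_state": state.get("result_state"),
            }
        sleep(poll_seconds)
```

A call might look like `run_job_and_wait(make_http_transport(host, token), job_id, {"run_date": "2024-01-01"})`, where `host`, `token`, `job_id`, and the `run_date` parameter are placeholders for your own workspace URL, credential, job, and Job Parameters.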

Launching Databricks Workflows from ADF lets you get more horsepower out of your Azure Databricks investment

Using Azure Data Factory and Azure Databricks together has been a GA pattern since 2018, when it was announced in this blog post. Since then, the integration has been a staple for Azure customers, who have primarily followed this simple pattern:

- Use ADF to land data in Azure storage via its 100+ connectors, using a self-hosted integration runtime for private or on-premises connections

- Orchestrate Databricks Notebooks through the native Databricks Notebook activity to implement scalable data transformation in Databricks using Delta Lake tables in ADLS

Although this pattern has been very valuable over time, it has constrained customers to the following modes of operation, which rob them of the full value of Databricks:

- Using All-Purpose compute to run Jobs in order to avoid cluster start times -> running into noisy-neighbor problems and paying for All-Purpose compute for automated jobs

- Waiting for a cluster to start for each Notebook execution when using Jobs compute -> classic clusters are spun up per notebook execution, incurring cluster start time each time, even for a DAG of notebooks

- Managing Pools to shorten Job cluster start times -> pools can be hard to manage and can often lead to paying for VMs that are not being used

- Using an overly permissive permissions pattern for the integration between ADF and Azure Databricks -> the integration requires workspace admin or the cluster creation permission

- No ability to use newer Databricks features such as Databricks SQL, DLT, or Serverless

While this pattern is scalable and native to Azure Data Factory and Azure Databricks, the tooling and capabilities it offers have stayed the same since its launch in 2018, even though Databricks has grown by leaps and bounds into a Data Intelligence Platform.

Azure Databricks goes beyond traditional analytics to deliver a unified Data Intelligence Platform on Azure. It combines a Lakehouse architecture with built-in AI and advanced governance to help customers unlock insights faster, at lower cost, and with enterprise-grade security. Key capabilities include:

- OSS and open standards

- An industry-leading Lakehouse catalog through Unity Catalog for securing data and AI across code, languages, and compute inside and outside of Azure Databricks

- Best-in-class performance and price performance for ETL

- Built-in capabilities for traditional ML and GenAI, including fine-tuning LLMs, using foundation models (including Claude Sonnet), building agent applications, and serving models

- Best-in-class DW on the Lakehouse with Databricks SQL

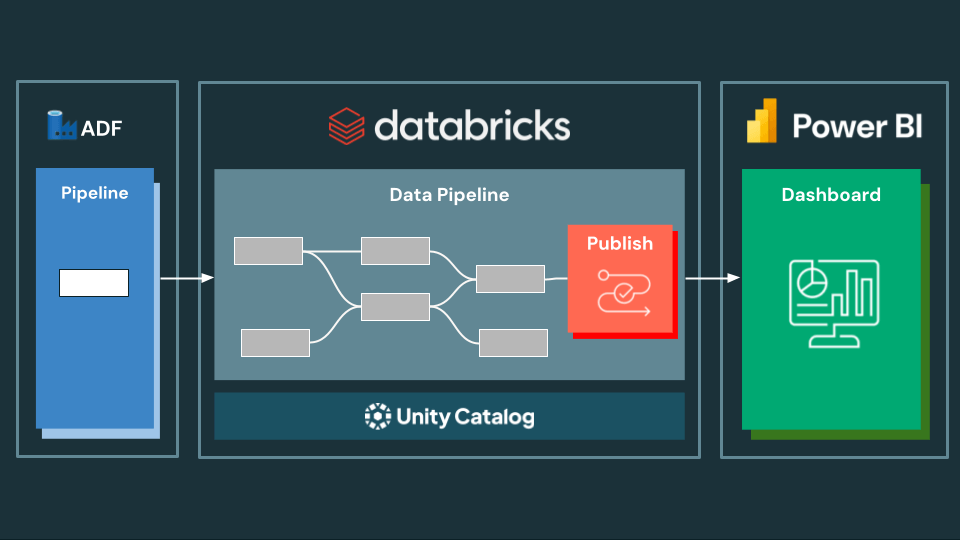

- Automated publishing and integration with Power BI through the Publish to Power BI functionality found in Unity Catalog and Workflows

With the release of the native Databricks Job activity in Azure Data Factory, customers can now run Databricks Workflows and pass parameters to the Job Runs. This new pattern not only resolves the constraints above, but also enables the following Databricks features that were not previously available from ADF:

- Programming a DAG of Tasks inside Databricks

- Using Databricks SQL integrations

- Running DLT pipelines

- Using the dbt integration with a SQL warehouse

- Using classic Job cluster reuse to shorten cluster start times

- Using Serverless Jobs compute

- Standard Databricks Workflow functionality such as Run As, Task Values, conditional execution such as If/Else and For Each, AI/BI Tasks, Repair Runs, notifications/alerts, Git integration, DABs support, built-in lineage, queuing and concurrent runs, and much more
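As a rough illustration of two items in this list, a DAG of Tasks and Job cluster reuse, the sketch below builds a job definition in the shape accepted by the Jobs API (`tasks`, `depends_on`, `job_clusters`, `job_cluster_key`, `parameters`). The job name, notebook paths, parameter, and cluster sizing are hypothetical placeholders, and `execution_order` is a helper written for this example, not part of any Databricks SDK.

```python
from typing import Any, Dict, List


def build_job_payload() -> Dict[str, Any]:
    """Return a job definition with three chained tasks on one shared job cluster."""
    cluster_key = "shared_etl_cluster"  # hypothetical key reused by every task

    def notebook_task(key: str, path: str, upstream: List[str]) -> Dict[str, Any]:
        return {
            "task_key": key,
            "job_cluster_key": cluster_key,  # same cluster, so only one startup
            "depends_on": [{"task_key": name} for name in upstream],
            "notebook_task": {"notebook_path": path},
        }

    return {
        "name": "adf-triggered-etl",  # hypothetical job name
        # Job Parameters that a caller such as ADF could override per run.
        "parameters": [{"name": "run_date", "default": "2024-01-01"}],
        "job_clusters": [{
            "job_cluster_key": cluster_key,
            "new_cluster": {  # illustrative sizing only
                "spark_version": "15.4.x-scala2.12",
                "node_type_id": "Standard_DS3_v2",
                "num_workers": 2,
            },
        }],
        "tasks": [
            notebook_task("ingest", "/Workspace/etl/ingest", []),
            notebook_task("transform", "/Workspace/etl/transform", ["ingest"]),
            notebook_task("publish", "/Workspace/etl/publish", ["transform"]),
        ],
    }


def execution_order(tasks: List[Dict[str, Any]]) -> List[str]:
    """Order task keys so each task follows everything it depends on."""
    deps = {
        task["task_key"]: {d["task_key"] for d in task.get("depends_on", [])}
        for task in tasks
    }
    for key, upstream in deps.items():
        unknown = upstream - set(deps)
        if unknown:
            raise ValueError(f"{key} depends on unknown tasks: {sorted(unknown)}")

    order: List[str] = []
    remaining = dict(deps)
    while remaining:
        done = set(order)
        ready = sorted(k for k, upstream in remaining.items() if upstream <= done)
        if not ready:
            raise ValueError("dependency cycle detected")
        order.extend(ready)
        for key in ready:
            del remaining[key]
    return order
```

Because all three tasks reference the same `job_cluster_key`, they run on one job cluster instead of each paying its own startup time, which is the contrast with the per-notebook clusters described earlier. `execution_order(build_job_payload()["tasks"])` is only a local sanity check on the dependency graph before submitting the definition.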

Most importantly, customers can now use the ADF Databricks Job activity to take advantage of the Publish to Power BI Tasks in Databricks Workflows, which automatically publish semantic models to Power BI from schemas in Unity Catalog and trigger an import if there are tables with storage modes using Import or Dual (see the setup instructions in the documentation). A demo of Power BI Tasks in Databricks Workflows is available here. To complement this, see the Power BI on Databricks Best Practices Cheat Sheet: a concise, actionable guide that helps teams configure and optimize their reports for performance, cost, and user experience from the start.

The Databricks Job activity in ADF is the new best practice

Using the Databricks Job activity in Azure Data Factory to start Databricks Workflows is the new best-practice integration when using these two tools. Customers can immediately start using this pattern to take advantage of all the capabilities in the Databricks Data Intelligence Platform. For customers using ADF, adopting the ADF Databricks Job activity will lead to immediate business value and cost savings. Customers with ETL frameworks that use Notebook activities should migrate them to Databricks Workflows and the new ADF Databricks Job activity, and prioritize this initiative in their roadmap.

Get started with a free 14-day trial of Azure Databricks.